Researchers used more than 1,700 photos of students and others without their permission for a facial recognition study sponsored by US military and intelligence services, according to the Colorado Springs Independent and the Financial Times. While technically legal, the study has raised questions about privacy around facial recognition tech, especially considering how the photos might end up being used. "This is essentially normalizing peeping Tom culture," the Electronic Frontier Foundation's David Maas told CSIndy.

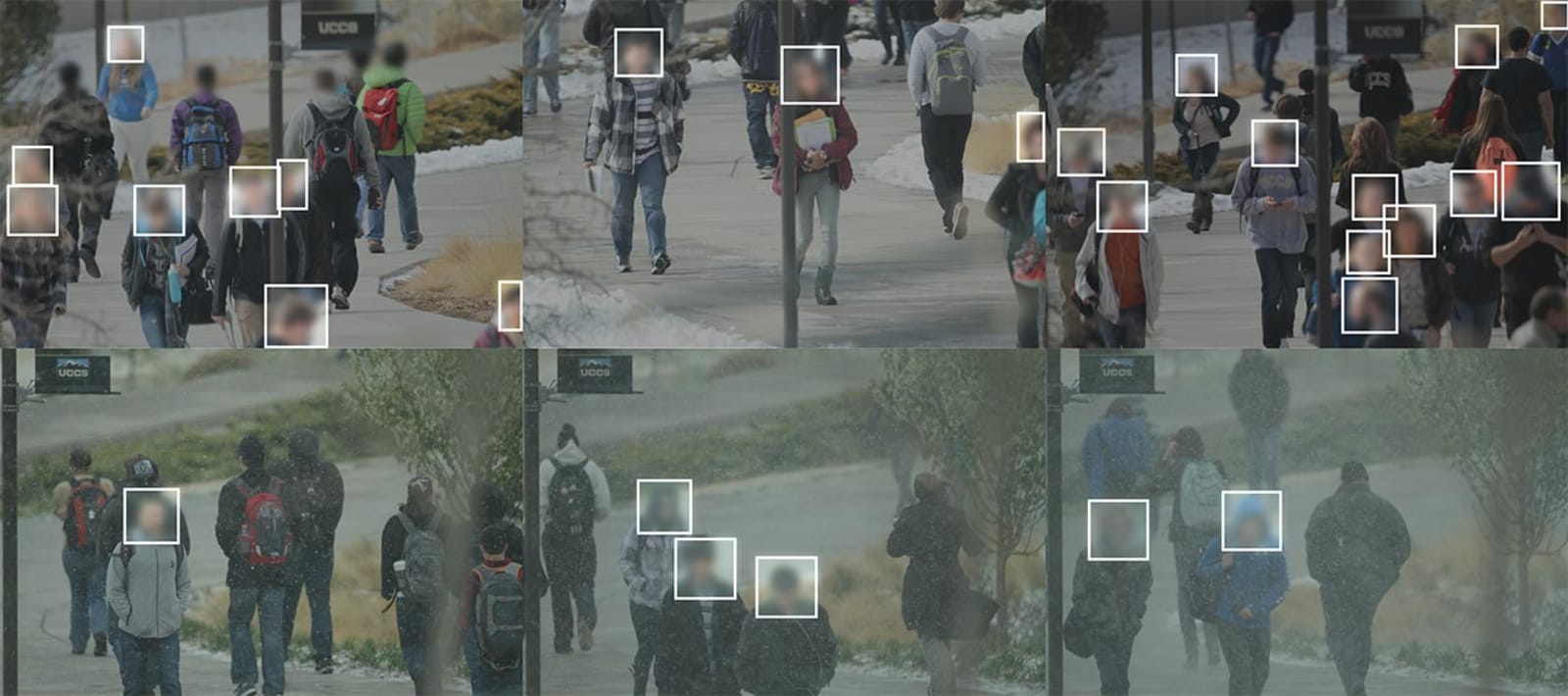

The study, carried out in 2012 and 2013, was designed to determine, in part, whether algorithms could identify facial features from a long distance, through obstacles and in poor light. A telephoto camera was set up about 150 meters from a public area with a lot of foot traffic. Most of the subjects were looking away from the camera, with many looking at their phones.

After researchers carefully combined the photos, they created a dataset called "Unconstrained College Students." Since it's more random and less detailed than other databases, it could help scientists develop algorithms that work from farther away. That could be particularly useful to the military, for instance, by helping them see if an approaching boat was a friend or foe.

The professor who conducted the study, Dr. Terrence Boult, said that acquiring images of people in public places is not illegal, and the study was cleared by the school.

"The research protocol was analyzed by the UCCS Institutional Review Board, which assures the protection of the rights and welfare of human subjects in research," wrote University spokesperson Jared Verner in a statement. "No personal information was collected or distributed in this specific study. The photographs were collected in public areas and made available to researchers after five years when most students would have graduated."

Still, it seems those measures weren't enough. Boult notes that the dataset was initially made available to corporate researchers, but was later pulled because the Financial Times article included the dates and times the photos were taken, jeopardizing anonymity. "They gave out more information in the article than we had intended," Boult told the Denver Post.

Even so, the study will do more good than harm, according to Boult. "If police use them and they match the wrong person, that's not good," he said. "Our job as researchers is to balance the privacy needs with the research value this provides society, and we went above and beyond what was required."

Facial recognition is increasingly a hot-button topic, of course. The city of San Francisco recently banned the systems, and Uber is facing claims of racism over its driver recognition system. Critics of the study also note that the subjects may not be thrilled with how the data is eventually used. "He may be helping them do something that's not right in the first place," said Denver University's Bernard Chao.

Via: Financial Times

Source: UCCS, Colorado Springs Independent

via https://AIupNow.com, Khareem Sudlow