Avi Bar-Zeev spent nearly 30 years working on AR/VR/MR, helping companies like Disney, Microsoft, Amazon, and Apple make decisions about how to unlock the positive potential of new technologies while minimizing harm. Bar-Zeev helped start the original HoloLens project at Microsoft. Nothing in this article should be read as disclosing unannounced products or ideas from those companies.

Humans have evolved to read emotions and intentions in large part through our eyes. Modern eye-tracking technology can go further, promising new wonders in human-computer interaction. But this technology also increases the danger to your privacy, civil liberties, and free agency.

Bundled into VR headsets or AR glasses, eye-tracking will, in the near future, enable companies to collect your intimate and unconscious responses to real-world cues and those they design. Those insights can be used entirely for your benefit. But they will also be seen as priceless inputs for ad-driven businesses, which will learn, model, predict and manipulate your behavior far beyond anything we’ve seen to date.

I’ve been working to advance Spatial Computing (also known as AR, VR, MR, XR) for close to 30 years. Back in 2010, I was lucky enough to help start the HoloLens project at Microsoft. The very first specs for that AR headset included eye-tracking. My personal motivation was a vision for the next great user interface, one that dispenses with “windows” and “mice,” to let humans act and interact naturally in our 3D world.

I am happy to see some form of these ideas included in the HoloLens v2, and also Magic Leap and Varjo headsets, to name a few. I’ve seen articles hailing the coming cornucopia of eye-tracked user-data for business. The gold rush to your eyes is just beginning. But there’s an important part of the conversation that is still largely absent from public view:

How can we foster the best uses while minimizing harm?

Now is the time to begin building awareness and expectations. Eventually, I anticipate companies will have to concede to a progressive government somewhere in the world (most likely in Europe) that eye data is like other health or biometric data, meaning it must be secured and protected at least as well as your medical records and fingerprints.

How Eye Tracking Works

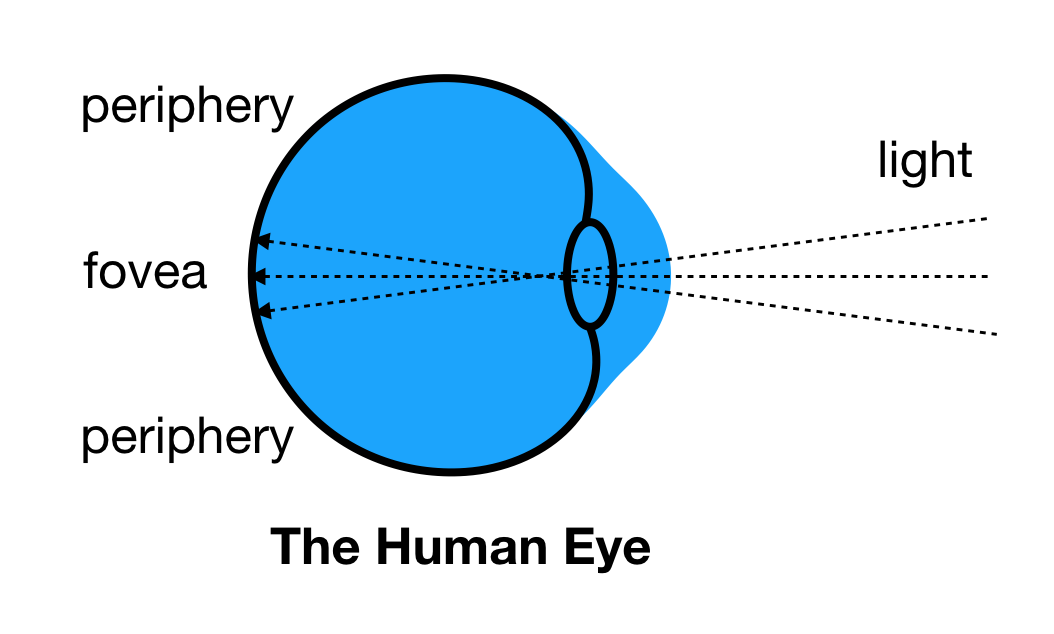

Our retinas sit in the back of our eyes, turning the light they receive into signals our brain can interpret, so that we can “see.” Our retinas have several specialized areas, evolved to help us survive.

The highest-resolution parts, called “foveae,” cover only a small patch of each retina, reaching about five degrees from center. Most of the remainder is called “peripheral vision,” going out to about 220 degrees across both eyes. The peripheral areas are best at seeing motion and key patterns like horizons, shadows and even other pairs of eyes looking back at us. But in the periphery, surprisingly, we see very little color or fine detail.

All of the words on this page hopefully look sharp. But your brain is deceiving you. You’re really only able to focus clearly on a word or two at any given time.

To maintain the illusion of high detail everywhere, our eyes dart around, scanning like a flashlight in the dark, building up a picture of the world over time. The peripheral detail is mostly remembered or imagined.

Those rapidly darting eye motions are called “saccades.” Their patterns can tell a computer a lot about what we see, how we think, and what we know. They show what we’re aware of and focusing on at any given time. In addition to saccades, your eyes also make “smooth pursuit” movements, for example while tracking a moving car or baseball. Your eyes make other small corrections quite often, without you noticing.
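
One common way to separate saccades from fixations in recorded gaze data is a simple velocity threshold. The sketch below is only an illustration of that idea, not any vendor's method. The function name, the sample format, and the 30 degrees-per-second threshold are assumptions chosen for the example.

```python
import math

def classify_gaze_samples(samples, velocity_threshold_deg_s=30.0):
    """Label each interval between consecutive gaze samples.

    samples: list of (time_s, x_deg, y_deg) tuples, gaze angles in degrees.
    Returns one label per interval: "saccade" or "fixation".
    The threshold is an illustrative assumption, not a standard value.
    """
    labels = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Approximate angular speed; reasonable for small movements.
        speed = math.hypot(x1 - x0, y1 - y0) / dt
        labels.append("saccade" if speed > velocity_threshold_deg_s else "fixation")
    return labels

# Hypothetical samples at 100 Hz: the eye rests, jumps about 3 degrees, rests again.
samples = [(0.00, 0.0, 0.0), (0.01, 0.05, 0.0), (0.02, 3.0, 0.0), (0.03, 3.02, 0.0)]
labels = classify_gaze_samples(samples)
```

A two-way threshold like this would lump smooth pursuit in with fixations. Telling those apart would need a second criterion, which this sketch leaves out.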

Computers can be trained to track these movements. The most common eye-tracking approach uses one or more tiny video cameras pointed closely at your eyes. Computer vision software processes the video in real-time to find your pupils, computing a direction for each eye. Various methods add infrared light from tiny LEDs nearby, whose reflections are captured by the cameras. This works because your eyes bulge out more around the cornea. The reflections, or “glints,” will slide around predictably. Other approaches to eye tracking measure the electrical signals from the muscles that drive the eyes. Yet other methods precisely measure the distance from the sensor to the surface of the eye, again relying on that natural bulge.

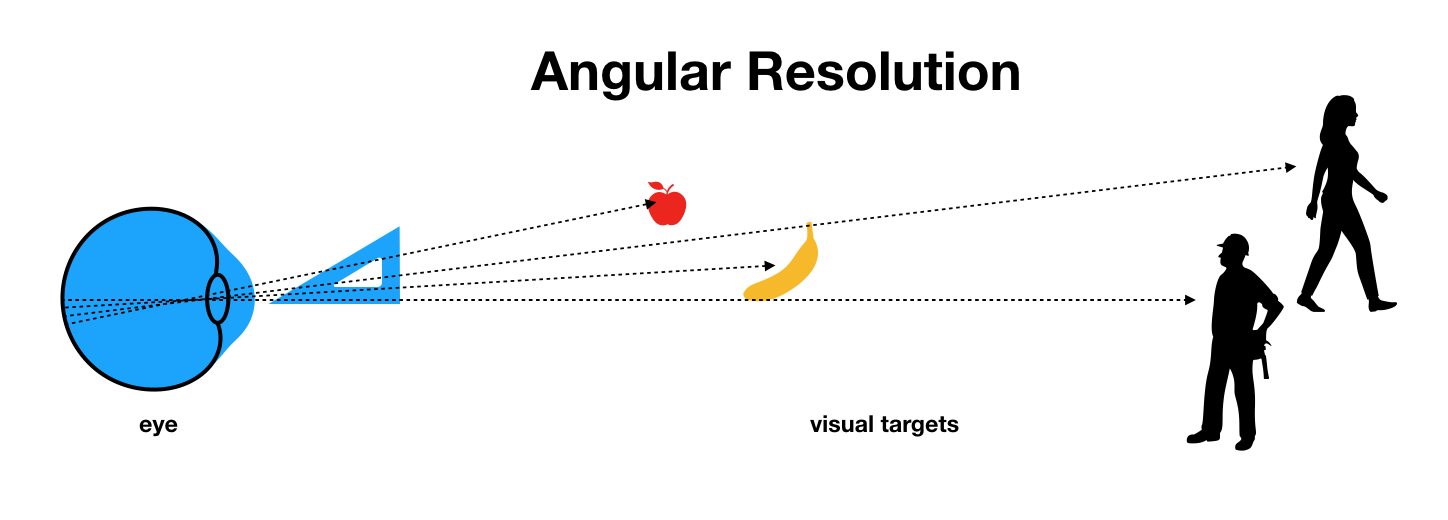

The better systems are able to distinguish between two or more visual targets that are only half a degree apart, as measured by imaginary lines drawn from each eye. But even lower-precision eye-tracking (1 - 2 degrees) can help infer a user’s intent and/or health. Size, weight, and power requirements for eye-tracking hardware & software place additional limits on consumer adoption.
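
To get a feel for what half a degree means, the angle between two lines of sight can be computed directly. This is a minimal sketch under simplified assumptions: a single eye at the origin, targets given as 3D direction vectors, and hypothetical function names.

```python
import math

def angle_between_deg(a, b):
    """Angle in degrees between two 3D direction vectors from the eye."""
    ax, ay, az = a
    bx, by, bz = b
    cross = (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)
    cross_norm = math.sqrt(sum(c * c for c in cross))
    dot = ax * bx + ay * by + az * bz
    # atan2 stays numerically stable for very small angles.
    return math.degrees(math.atan2(cross_norm, dot))

def can_distinguish(separation_deg, tracker_precision_deg):
    """True if two targets are at least as far apart as the tracker's precision."""
    return separation_deg >= tracker_precision_deg

# Two targets 1 cm apart, both 1 m straight ahead of the eye.
separation = angle_between_deg((0.0, 0.0, 1.0), (0.01, 0.0, 1.0))
fine_tracker_ok = can_distinguish(separation, 0.5)    # half-degree system
coarse_tracker_ok = can_distinguish(separation, 1.0)  # one-degree system
```

Under these assumptions the two targets are a little over half a degree apart, so the half-degree system can separate them and the one-degree system cannot.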

If you temporarily suppress your own saccades, as in the above experiment, you can begin to understand how limited our peripheral vision really is. By staring at the central dot, your brain is unable to maintain the illusion of “seeing” everywhere. Your memory of the periphery starts to quickly drift, causing a distorted appearance for even the “world’s sexiest” people.

It turns out that your eyes can also be externally manipulated to a surprising degree. Magicians use this to distract you from their sleight of hand. Since saccades help us take in new details, any motion in our local environment may draw our gaze. In the wild, detecting such motion could be vital to survival. More interestingly, our eyes may naturally follow the magician’s own gaze, as if to see what they see, following normal human social cues (a.k.a. “joint attention”).

Luring our eyes is also useful for storytelling, where movie directors can’t aim and focus us like a camera. They count on us seeing what they intend us to see in every shot.

Visual and UI designers similarly design websites and apps that try to constructively lead our gaze, so we might notice the most important elements first and understand what actions to take more intuitively than if we had to scan everywhere and build a map.

Even more remarkably, we are essentially blind while our eyes are in saccadic motion (the world would otherwise appear blurry and confusing). These natural saccades happen about once a second on average. We almost never notice.

During this frequent short blindness, researchers can opportunistically change the world around us. Like magicians, they can swap out one whole object for another, remove something, or rotate an entire (virtual) world around us. If they simply zapped an object, you’d likely notice. But if they do it while you blink or are in a saccade, you most likely won’t.

That last bit may be useful for future Virtual Reality systems, where you are typically wearing an HMD in an office or living room. If you tried to walk in a straight line in VR, you’d likely walk into a table or wall IRL. “Guardian” systems try to prevent this by visually warning you to stop. With “redirected walking,” you’d believe that you’re walking straight, while you are actually being lured on a safer cyclical path through your room, using a series of small rotations and visual distractors inside the headset. This next video demonstrates how it works.

In this next “real life” example, we can see the experimenter/host literally swaps himself out for someone else, mid-conversation, on a public street. With suitable distractions, the subject of the experiment doesn’t notice. The bottom line is that we see much less than we think we do.

The Best Uses of Eye Tracking

There are enough positive and beneficial uses of eye-tracking to warrant its continued deployment, with sufficient safeguards.

For starters, eye tracking promises to fundamentally increase the capabilities and lower the energy requirements, cost, and weight of new AR & VR systems. The goal is (like the actual retina) to provide high resolution only where you are looking.
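
The idea of rendering high resolution only where you are looking can be sketched as a function from angular distance to a render-quality scale. The five-degree foveal radius echoes the figure given earlier in this article. The linear falloff, the 30-degree falloff width, and the 0.1 minimum scale are assumptions for illustration only.

```python
def resolution_scale(eccentricity_deg, foveal_radius_deg=5.0,
                     falloff_deg=30.0, min_scale=0.1):
    """Fraction of full resolution to render at a given angle from the gaze point.

    Full detail inside the foveal radius, then a linear drop to min_scale.
    All parameter values are illustrative assumptions.
    """
    if eccentricity_deg < 0:
        raise ValueError("eccentricity must be non-negative")
    if eccentricity_deg <= foveal_radius_deg:
        return 1.0
    t = min(1.0, (eccentricity_deg - foveal_radius_deg) / falloff_deg)
    return 1.0 - t * (1.0 - min_scale)

at_gaze = resolution_scale(2.0)         # inside the fovea: full detail
mid_periphery = resolution_scale(20.0)  # partway through the falloff
far_periphery = resolution_scale(90.0)  # clamped to the minimum
```

A real renderer would more likely use a few discrete quality zones and would need to keep up with saccades, but the shape of the trade-off is the same.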

Eye-tracking is also very promising for natural user input (NUI). If we know what you’re focusing on, we can show the most relevant UI actions instead of popping up complicated menus or asking you to search a grid of buttons with hand-held controllers. For example, if I look at a lamp, the two most likely actions are to turn it on or off.

The potential for eye-tracking to feel like mind-reading is impressive. One of the patents we filed at Microsoft was for glasses that could change their prescription from near to far based on what you’re looking at, say a phone or a street sign. The Magic Leap headset switches between “near” and “far” focus planes for its AR content. The ideal everyday glasses would dial in your prescription for the real world according to your current “focus.”

More superpowers have been widely discussed. For example, another patent we filed in 2010 included the idea that your glasses could detect that you were trying to read something farther away and magnify the view for you.

The most common use of this technology today is to study and improve marketing decisions. The typical scenario has a test subject wearing an eye-tracking device in a real location like a supermarket. A researcher records how the subject’s eyes move across the shelves to determine how, what, and when that subject notices various product details. It’s no wonder that people want to now recreate this scenario in VR to achieve faster results with greater control of the variables.

This use of technology would be particularly invasive if the test subjects didn’t first consent to how the data was being collected, used and retained. With informed consent and proper data handling, it’s within ethical bounds. The bottom line of informed consent is that it requires genuine understanding by the test subject or participant. Clicking a button on a form is not informed consent, especially if we might only skim the text.

Eye-tracking may also be useful for diagnosing autism in young children, as the patterns of eye motion seem to deviate from neurotypical kids. Autistic kids may scan their environment more and fixate less on faces. Eye tracking may be able to help address some of the cognitive differences we find as these amazing kids grow into adults.

Some diseases may be diagnosed by measuring saccadic motion and pupil response, including schizophrenia, Parkinson’s, ADHD and concussions. This is Star Trek tricorder territory.

Level of Interest

There is a growing body of research to indicate that pupil-dilation, directly measurable by many eye-tracking approaches, can indicate the level of interest, emotional, sexual or otherwise, in what one is looking at.

Like a camera’s aperture, the human iris primarily serves to regulate the amount of light hitting the retina. But, after controlling for these light levels, the size of the pupil and its rate of change tells the story of a person’s inner emotional and cognitive states. Pupil dilation is an autonomic reflex, meaning it is very difficult to consciously control.
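
Controlling for light levels can be pictured as fitting a baseline of pupil size against brightness, then looking at what is left over. The sketch below assumes a straight-line relationship between pupil diameter and the logarithm of luminance, and the calibration numbers are made up for illustration. Real pupil responses are nonlinear and lag behind changes in light.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return slope, mean_y - slope * mean_x

def pupil_residual_mm(luminance_cd_m2, diameter_mm, slope, intercept):
    """Measured diameter minus the diameter the light level alone predicts."""
    expected = slope * math.log10(luminance_cd_m2) + intercept
    return diameter_mm - expected

# Hypothetical calibration: pupil diameter (mm) at several luminance levels.
calib_luminance = [1.0, 10.0, 100.0, 1000.0]
calib_diameter = [6.0, 5.0, 4.0, 3.0]
slope, intercept = fit_line([math.log10(v) for v in calib_luminance], calib_diameter)

# A later reading that is wider than the lighting alone would explain.
residual = pupil_residual_mm(100.0, 4.6, slope, intercept)
```

A positive residual here means the pupil is more dilated than the light level predicts. That leftover is the part a system might try to attribute to interest or arousal.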

Recall the “Voight-Kampff” test from Blade Runner, the film adaptation of a Philip K. Dick novel. With current eye tracking technology, it’s not that far from reality, except artificial humans might be more easily designed to pass the “empathy” test, whereas human sociopaths might fail miserably.

Eye-gaze becomes even more revealing when coupled with other sensors, like EEG for brain waves (revealing states of mind), galvanic skin response (anxiety, stress), pulse and blood pressure sensors (anger, excitement). Those sensors can generally reveal your present emotional state, but not the external triggers. Eye-gaze can reveal a fair amount of both.

There are even better uses of eye tracking currently being researched in various places around the world. This is still early days.

The Dark Side of Eye Tracking

Some VR companies already collect your body-motion data. This can help them study and improve their user experience. I’ve worked on projects that did the same, albeit always with informed consent. But there’s a danger to us, and to these companies, that this data could be used improperly. And by not sharing exactly what's being saved, how it’s being stored, protected and used, we’d never know.

They say “trust us,” and we collectively ask “why?”

Anonymity and Unique Identifiers

Machine-learning algorithms can uniquely identify you from your movements in VR (e.g., walk, head and hand gestures). Dr. Jeremy Bailenson of Stanford VR has written about how these kinds of algorithms can be used both for and against us. It seems that the patterns of your irises and blood vessels in your retinas are even more unique.

Regardless of how you are most easily identified, if a company retains both your real identity (e.g., name, credit cards, SSN) and your personally-identifying biometric data, then all promises of anonymity are temporary at best. Re-identifying you becomes trivial.

Microsoft has made a good case for its Iris-ID to positively identify you in HoloLens 2. Biometric systems typically begin by computing a “digital signature” instead of storing raw images. If the signature of the current eyes matches the recorded signatures, then you are you. Assuming your iris signature is unique and remains protected, this is better than passwords.
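
Signature matching of this kind is often framed as a distance comparison rather than an exact equality check, since no two captures of the same eye are identical. The sketch below uses the fraction of differing bits between two bit strings, a measure commonly associated with iris recognition. It makes no claim about how any shipping product works. The signature bytes are invented and the 0.32 acceptance threshold is an assumption.

```python
def hamming_fraction(sig_a: bytes, sig_b: bytes) -> float:
    """Fraction of bits that differ between two equal-length signatures."""
    if not sig_a or len(sig_a) != len(sig_b):
        raise ValueError("signatures must be non-empty and the same length")
    differing_bits = sum(bin(x ^ y).count("1") for x, y in zip(sig_a, sig_b))
    return differing_bits / (8 * len(sig_a))

def is_same_eye(enrolled: bytes, candidate: bytes, max_fraction: float = 0.32) -> bool:
    """Accept the candidate if it is close enough to the enrolled signature.

    max_fraction is an illustrative threshold, not a recommended setting.
    """
    return hamming_fraction(enrolled, candidate) <= max_fraction

# Invented 32-bit signatures; real ones would be far longer.
enrolled = bytes([0b10101010, 0b11110000, 0b00001111, 0b11001100])
same_eye = bytes([0b10101011, 0b11110000, 0b00001111, 0b11001110])   # 2 bits differ
other_eye = bytes([0b01010101, 0b00001111, 0b11110000, 0b00110011])  # every bit differs

accepted = is_same_eye(enrolled, same_eye)
rejected = not is_same_eye(enrolled, other_eye)
```

Because matching tolerates small differences, anyone who obtains the enrolled signature can produce something that passes. That is why where the signature is stored matters so much.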

The best security practice I’m aware of is to store these signatures on device, in a hardware-enforced encrypted “vault.” A determined hacker would have to steal and crack your physical device, which could be a slow and painful process. This makes exploits, especially mass exploits, more difficult. Optionally copying this data to the cloud could add some user convenience (e.g., system backup & restore, cross-device logins). But it could make it easier for someone to obtain your signatures and “replay” them in your absence to impersonate you.

Resetting passwords is easy. Changing your eyes is hard. Just one breach is enough to ruin things for life. So any company on this path should use the strictest possible security.

Data Mining

Microsoft and Magic Leap both make it relatively easy for third party developers to build apps that use eye-gaze data from their devices. This is exciting for developers exploring new interaction paradigms, but it also represents a serious privacy concern.

Microsoft declined to comment for this article. Magic Leap did not respond to a request for comment. An Oculus spokesperson responded: “We don’t have eye tracking technology in any of our products shipping today, but it is a technology that we are exploring for the future.”

Let’s assume our AR devices have front-facing cameras to “see” what you see, and AI to classify, recognize, and categorize the object or person you’re looking at (see Google Cloud AI, Amazon Rekognition, Azure CV services). Similar concerns apply for pure VR devices, but the virtual objects in the scene are already known.

A third party developer or a hardware manufacturer themselves could presumably capture how you gazed at anything; whether you passed over, pondered, fixated, or looked away quickly; perhaps even whether you got interested, excited, embarrassed, or bored (perhaps using pupil dilation). They could learn your sexual orientation, by measuring your attention patterns when looking at various other people. They could discover which friends or co-workers you feel emotions for (e.g., interest, attraction, frustration, even jealousy), what specific physical features you’re attracted to (where did you look?), your confidence level (did you quickly look away?) and even your relationship status (does your heart beat faster?). Pulse rate, for example, might be gleaned from the same eye-facing cameras. Additional sensors could add more insights.

Such a company could learn how you regard any visually-represented idea: cars, books, drinks, food, websites, political posters and so on. They would know what you like, hate, or simply don’t pay attention to.

Eye-tracking represents an unconscious “like” button for everything

Your eyes’ movements are largely involuntary and unconscious. If a company is collecting the data, you won’t know. The main limitation on these activities today is the fact that AR headsets are not yet ready to be worn all day, everywhere. That will no doubt change with time. Will security practices stay ahead of the adoption curve?

One easy mitigation would be to share eye-gaze data with an app only when a user is looking at that app. This is not a complete solution, and one should consider not sharing this raw data at all. Of course, even an irresponsible company might promise to never keep or share the raw data, while still retaining the higher-level derived data, which is more valuable.
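
That mitigation can be pictured as a filter sitting between the eye tracker and the apps. The sketch below is hypothetical throughout: the class and function names are invented, and apps are simplified to flat rectangles in the user's view, listed front to back.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AppRegion:
    """Rectangle an app occupies in the user's view (hypothetical units)."""
    app_id: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

def route_gaze_sample(x, y, regions):
    """Deliver a gaze point only to the app being looked at, if any.

    Returns {app_id: (local_x, local_y)} for the first region containing the
    point, or an empty dict when the user is not looking at any app.
    Coordinates are made relative to the app so it learns nothing about
    where it sits in the wider scene.
    """
    for region in regions:
        if region.contains(x, y):
            return {region.app_id: (x - region.x_min, y - region.y_min)}
    return {}

regions = [
    AppRegion("mail", 0.0, 0.0, 10.0, 10.0),
    AppRegion("maps", 20.0, 0.0, 30.0, 10.0),
]
looking_at_mail = route_gaze_sample(5.0, 5.0, regions)
looking_between = route_gaze_sample(15.0, 5.0, regions)
looking_at_maps = route_gaze_sample(25.0, 2.0, regions)
```

Even with a gate like this, an app still sees everything you look at inside its own window, so this limits the exposure rather than removing it.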

This “metadata” is essentially the rich behavioral model or “psychographic profile” of how you will likely respond to various cues. It represents a semantic graph of your personality and your predicted future behavior. It’s the closest thing we can imagine to your digital self.

If you doubt this outcome, take a few moments to watch this video about current threats. Tristan Harris, former design ethicist at Google and co-founder of the Center for Humane Technology, refers to these behavioral models as “voodoo dolls” to the extent they can remotely control you.

This rich behavioral model is used to both keep you coming back, and also to get the ideal ads in front of you. We know that an ad that leads to a verified transaction is several orders of magnitude more valuable than one that doesn't. Just proving that an ad created an impression at all is valuable, given all the noise. There are billions of dollars riding on this.

Extrapolation

Have you ever searched for a product on Google and then seen ads about it for days or weeks? Have you ever seen those same ads continue to appear even after you bought said product?

This happens because Google doesn’t yet know that you made a decision, bought the product, or that you may not still be interested (e.g., was it a gift?).

An eye-tracking AR device provider would likely know all of these things in real-time. “Closing the loop” means knowing how well a given ad impression succeeded. It means knowing enough about you to show you the ads that will be most timely and effective for you. Such a company would have even more opportunities to make money from your choices — and your indecision — by effectively serving up these insights and impulses to those who want to sell you things, at the exact right time and place.

Experimentation

It’s worth pointing out that advertising is not inherently evil. Some ads make you aware of products or ideas you might actually like, thus providing a mutual benefit. But enough advertising today is already manipulative and misleading, albeit in a grossly inept kind of way. The old joke is that half of every ad budget is wasted, but no one knows which half. With eye tracking, marketers can find that out.

Let’s say a company not only collects your intrinsic responses via your eyes, but also decides to actively experiment on you to trigger those responses on demand. Consider what Facebook got caught doing in 2014, after manipulating social news feeds to measure user reactions.

Would you notice if a stranger were suddenly swapped out for someone else? Consider the video above where people don’t notice the host being swapped out in the middle of a conversation. Consider the (well-intentioned) “redirected walking” research, which used visual distractors to draw one’s gaze. They effectively hid how they changed the world. With eye-tracking, AR, and VR, those techniques can be used against you just as easily.

Experiment-driven content will be embedded in the world, as props, background, or even objects of attention. Some companies will measure and record our unconscious emotional responses for all of it. For AR, this means placing virtual objects in the world as well as classifying whatever else we look at. Websites today run what they call “A/B” experiments, where they try different content or features for different sub-populations to compare results.

But here, given our blindness during blinks and saccades, a clever algorithm could repeatedly swap things around you, in real-time, testing your A/B reactions iteratively. If you tried to foil this by memorizing some of what you see, the algorithm could likely detect your current focus and change something else instead.

In a short time, the experiment-driven profile is an order of magnitude more detailed than the strictly ad hoc learning. You have been mapped and digitized.

Emotional Responses

Take a moment to imagine something that made you feel envious, regretful, angry, or happy. It could be something from your social life, family, work, politics, or any media that really moved you. Perhaps it’s a song lyric that perfectly matched your mood. The specific details are likely different for everyone. What matters is whether an emotional trigger moved you to do something.

Now imagine that natural synchronicity, this time coming from an explicit and hidden directive to motivate you to buy something, vote for or against someone, or just stay home. What if they learned exactly what, when, and how to play the right emotion for best results?

When we’re responding emotionally, we lose the veil of rationality and we are most easily manipulated. Someone just needs to know what buttons to push for each of us and then measure, score, and optimize the results over time, closing the loop.

The bottom line is that companies that live and die from ad revenue will need to keep growing their profits to keep their investors happy. The two most obvious ways they can do so are to a) add more users, or b) make more money from each user.

Eventually, without corrective forces, these companies will make decisions that lower their own safeguards. It’s the business model, not the ethics of employees, that matters most.

We are rightly concerned about insurance companies knowing our genetic susceptibilities. They would likely try to deny coverage or charge more for people who are more likely to get sick. We are rightly concerned about our health data remaining private against mining and abuse by prospective employers, governments, or in social situations.

Why would we be any less concerned here, when lucrative psychological information could be used against us and without our ability to say no?

In The Matrix, fictional AIs would occasionally alter the virtual world to further their oppressive goals. But even the Agents didn’t use the more sophisticated techniques we’re discussing here. In real life, people won’t be used as batteries. We’ll more likely be plugged in as abstract computational nodes, our attention and product-buying decisions channeling the flow of trillions of dollars of commerce; our free will up for grabs to the most ‘persuasive’ bidders.

Some people will read these ideas and salivate over the money-making and political potential of this new eye-tracked world. There could be many billions of dollars made.

Others may read this and demand protections today. This is exactly the kind of thing that often requires government intervention.

But still, a few people wisely ask: “what can I do to help?”

The easiest way to avoid legislation or undue pain is for us to live by a certain set of rules regarding eye-tracking, and related health and biometric data. These rules don’t prohibit most good uses, but they may help curtail the bad ones.

Plan A: Let’s Do This The Easy Way

Here’s a starter set of some policies that almost any company could live by:

- Eye-tracking data and derived metadata are considered both health and biometric data and must be protected as such.

- Raw eye data and related camera image streams should neither be stored nor transmitted. Highly sensitive data, like Iris-ID signatures, may be stored on each user’s device in a securely encrypted vault, protected by special hardware from hacking and spoofing. Protect this at all costs.

- Derivatives of biometric data, if retained, must be encrypted on-device and never transmitted without informed consent. There must be a clear chain of custody, permission, encryption, authentication, authorization and accounting (AAA) wherever the data goes (ideally nowhere). This data should never be combined with other data without additional informed consent and the same verifiably high security everywhere.

- Apps may only receive eye-gaze data, if at all, when a user is looking directly at the app, and must verifiably follow these same rules.

- Behavioral models exist solely for the benefit of the users they represent. The models must never be made available to, or used by, any third parties on or off device. It would be impossible for a user to properly consent to all of the unknown threats surfaced by sharing these models.

- EULAs, TOS, and pop-up agreements don’t provide informed consent. Companies must ensure that their users/customers are clearly aware of when, why and how their personal data is being used; that each user individually agrees, after a full understanding; and that the users can truly delete data and revoke permissions, in full, whenever they wish.

- Don’t promise anonymity in place of real security, especially if anonymity can later be reversed. Anonymization does not prevent manipulation. Bucketing or other micro-targeting methods can still enable the more manipulative applications of sensitive user data in groups.

- Users must be given an easy way to trace “why” any content was shown to them, which would expose to sunlight any such targeting and manipulation.

I’m calling on all companies developing Spatial Computing solutions to thoughtfully adopt principles like these and then say so publicly.

If you work for one of these companies, just imagine having to recall your great new XR hardware that has too-lax protections for sensitive user data in progressive countries. Imagine having a public class action lawsuit haunt you for years, especially when you were aware of the issues but took no action.

Mitigating this up-front will be cheaper by comparison. The principles above are designed to allow companies to continue to compete and differentiate themselves, while providing a floor that we must not go below.

So please have those meetings to debate these issues. Ask me or any other privacy + XR + AI expert to answer any questions you might have about the various threats and solutions.

For consumers, I’d first advise you to share this article (or anything better) to ensure your friends are at least as well-informed as you are. Spread the word. Get others interested now, before this gets twisted in politics and becomes a giant hairball.

Second, you might decide, like me, to strictly avoid using any product that doesn’t clearly and verifiably follow these protections. The danger is that once your data is recorded and used without your consent, you have lost control forever. It’s not worth the risk.

Third, help get the companies who make the relevant products more aware of these issues. Tell them that you do indeed care and that you are indeed watching. Most companies just need to know that we’re voting with our pocketbooks and they will take the path of least resistance.

Plan B: “They took the hard way…”

Failing these voluntary steps leads to almost certain regulation. Eventually, at least one company goes too far, gets caught, and everyone suffers.

Regulation is there to set limits on bad behavior and level the playing field, so the majority of conscientious companies and their customers aren’t disadvantaged by the few abusers.

Plan B is to pursue regulatory protections around the world. HIPAA already protects health information in the USA, at significant cost. Eye data is arguably health data, as it will be used for diagnosis of physical and mental health concerns. GDPR also seems to do some good, with fairly minimal pain. More will be needed.

We may reason that our “digital selves,” in the form of predictive behavior models, are arguably more like people (e.g. clones) than any corporation ever was. These “digital selves” should never be owned or traded by third parties. They may only exist as digital extensions of ourselves, with the same inalienable rights as human beings.

Regulation requires a broad understanding of the issues and processes, such that governments take action at their usual pace, if not faster. It could take several years, but there is some precedent. The downside is that regulation often slows innovation and ties the hands of those doing good as well. But it may indeed be required in this case.

Plan C: For Anything Else We Didn’t Think Of…

Plan C is the most speculative, but worth following to see where it leads. Some companies may not heed the call for self-policing. Legislation may be slow or get derailed for any number of reasons, including that the “good side” is really busy. However, the same ideas in this article that put us at risk could be used to protect us, proactively, via some future private enterprise.

Consider a new service, your Personal Protector, that collects the same private data and builds up the same robust model of your personality over time. It’s your digital self, but now it’s on your side and it works for your direct benefit.

We could take these agents and put them to work as our external “guardian angels” or “early warning systems.” If any ad-driven company wants to exploit us, a personal defender would be on the front line to detect the manipulation. It could respond as we would prefer, vs. how we might act without thinking. If a company wants to market to us, this defender could “consume” such distractions and spit out just what we want and need to know. It would safely put any exploitive company in a tiny box, providing most of their benefits, without the harm.

It reminds me of Ad Block software used in browsers today. And, as with Ad Block software, it’s hard to imagine how the ad-driven companies would continue to make money in this situation, except to try to block or outlaw these protections or beg us to turn them off.

But it’s also hard to feel sorry for these ad-driven companies in this scenario. They chose greed over the wishes and interests of their customers. And that’s simply not sustainable long-term.

The risk, of course, is that the enterprising company that best solves this problem in the most humane way up front, the one that earns our trust, could itself become a primary threat. Corporate principles can change when companies are acquired or founders or board members are replaced over time. Such a concentration of power over us is never inherently safe.

This may all seem either too scary or too far away for most people to worry about. We’re living in a time of significant change, and any of the other dozen existential worries we carry around could feel more real right now. However, for this particular problem, we have the means, motive, and opportunity to steer things in a better direction. So why wouldn’t we do everything possible?

Acknowledgements: I want to thank a few wise people for their early feedback and general insights on this work: Helen Papagiannis, Matt Meisneiks, Silka Meisneiks, Dave Lorenzini, Trevor Flowers, Eric Faden, David Smith, Zach Bloomgarden, Judith Szepesi, Dave Maass, and Jeremy Bailenson.

Avi Bar-Zeev, Khareem Sudlow