Generative adversarial networks, or GANs for short, are an effective deep learning approach for developing generative models.

Unlike other deep learning neural network models that are trained with a loss function until convergence, a GAN generator model is trained using a second model called a discriminator that learns to classify images as real or generated. Both the generator and discriminator model are trained together to maintain an equilibrium.

As such, there is no objective loss function used to train the GAN generator models and no way to objectively assess the progress of the training and the relative or absolute quality of the model from loss alone.

Instead, a suite of qualitative and quantitative techniques have been developed to assess the performance of a GAN model based on the quality and diversity of the generated synthetic images.

In this post, you will discover techniques for evaluating generative adversarial network models based on generated synthetic images.

After reading this post, you will know:

- There is no objective function used when training GAN generator models, meaning models must be evaluated using the quality of the generated synthetic images.

- Manual inspection of generated images is a good starting point when getting started.

- Quantitative measures, such as the inception score and the Frechet inception distance, can be combined with qualitative assessment to provide a robust assessment of GAN models.

Discover how to develop DCGANs, conditional GANs, Pix2Pix, CycleGANs, and more with Keras in my new GANs book, with 29 step-by-step tutorials and full source code.

Let’s get started.

How to Evaluate Generative Adversarial Networks

Photo by Carol VanHook, some rights reserved.

Overview

This tutorial is divided into five parts; they are:

- The Problem of Evaluating GAN Generator Models

- Manual GAN Generator Evaluation

- Qualitative GAN Generator Evaluation

- Quantitative GAN Generator Evaluation

- Which GAN Evaluation Scheme to Use

The Problem of Evaluating GAN Generator Models

Generative adversarial networks are a type of deep-learning-based generative model.

GANs have proved to be remarkably effective at generating both high-quality and large synthetic images in a range of problem domains.

Instead of being trained directly, the generator models are trained by a second model, called the discriminator, that learns to differentiate real images from fake or generated images. As such, there is no objective function or objective measure for the generator model.

Generative adversarial networks lack an objective function, which makes it difficult to compare performance of different models.

— Improved Techniques for Training GANs, 2016.

This means that there is no generally agreed upon way of evaluating a given GAN generator model.

This is a problem for the research and use of GANs; for example, when:

- Choosing a final GAN generator model during a training run.

- Choosing generated images to demonstrate the capability of a GAN generator model.

- Comparing GAN model architectures.

- Comparing GAN model configurations.

The objective evaluation of GAN generator models remains an open problem.

While several measures have been introduced, as of yet, there is no consensus as to which measure best captures strengths and limitations of models and should be used for fair model comparison.

— Pros and Cons of GAN Evaluation Measures, 2018.

As such, GAN generator models are evaluated based on the quality of the images generated, often in the context of the target problem domain.

Want to Develop GANs from Scratch?

Take my free 7-day email crash course now (with sample code).

Click to sign-up and also get a free PDF Ebook version of the course.

Manual GAN Generator Evaluation

Many GAN practitioners fall back to the evaluation of GAN generators via the manual assessment of images synthesized by a generator model.

This involves using the generator model to create a batch of synthetic images, then evaluating the quality and diversity of the images in relation to the target domain.

This may be performed by the researcher or practitioner themselves.

Visual examination of samples by humans is one of the common and most intuitive ways to evaluate GANs.

— Pros and Cons of GAN Evaluation Measures, 2018.

The generator model is trained iteratively over many training epochs. As there is no objective measure of model performance, we cannot know when the training process should stop and when a final model should be saved for later use.

Therefore, it is common to use the current state of the model during training to generate a large number of synthetic images and to save the current state of the generator used to generate the images. This allows for the post-hoc evaluation of each saved generator model via its generated images.

One training epoch refers to one cycle through the images in the training dataset used to update the model. Models may be saved systematically across training epochs, such as every one, five, ten, or more training epochs.

Although manual inspection is the simplest method of model evaluation, it has many limitations, including:

- It is subjective, including biases of the reviewer about the model, its configuration, and the project objective.

- It requires knowledge of what is realistic and what is not for the target domain.

- It is limited to the number of images that can be reviewed in a reasonable time.

… evaluating the quality of generated images with human vision is expensive and cumbersome, biased […] difficult to reproduce, and does not fully reflect the capacity of models.

— Pros and Cons of GAN Evaluation Measures, 2018.

The subjective nature almost certainty leads to biased model selection and cherry picking and should not be used for final model selection on non-trivial projects.

Nevertheless, it is a starting point for practitioners when getting familiar with the technique.

Thankfully, more sophisticated GAN generator evaluation methods have been proposed and adopted.

For a thorough survey, see the 2018 paper titled “Pros and Cons of GAN Evaluation Measures.” This paper divides GAN generator model evaluation into qualitative and quantitative measures, and we will review some of them in the following sections using this division.

Qualitative GAN Generator Evaluation

Qualitative measures are those measures that are not numerical and often involve human subjective evaluation or evaluation via comparison.

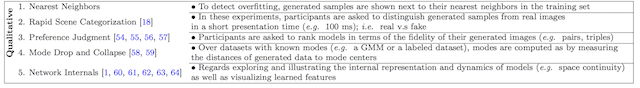

Five qualitative techniques for evaluating GAN generator models are listed below.

- Nearest Neighbors.

- Rapid Scene Categorization.

- Rating and Preference Judgment.

- Evaluating Mode Drop and Mode Collapse.

- Investigating and Visualizing the Internals of Networks.

Summary of Qualitative GAN Generator Evaluation Methods

Taken from: Pros and Cons of GAN Evaluation Measures.

Perhaps the most used qualitative GAN generator model is an extension of the manual inspection of images referred to as “Rating and Preference Judgment.”

These types of experiments ask subjects to rate models in terms of the fidelity of their generated images.

— Pros and Cons of GAN Evaluation Measures, 2018.

This is where human judges are asked to rank or compare examples of real and generated images from the domain.

The “Rapid Scene Categorization” method is generally the same, although images are presented to human judges for a very limited amount of time, such as a fraction of a second, and classified as real or fake.

Images are often presented in pairs and the human judge is asked which image they prefer, e.g. which image is more realistic. A score or rating is determined based on the number of times a specific model generated images on such tournaments. Variance in the judging is reduced by averaging the ratings across multiple different human judges.

This is a labor-intensive exercise, although costs can be lowered by using a crowdsourcing platform like Amazon’s Mechanical Turk, and efficiency can be increased by using a web interface.

One intuitive metric of performance can be obtained by having human annotators judge the visual quality of samples. We automate this process using Amazon Mechanical Turk […] using the web interface […] which we use to ask annotators to distinguish between generated data and real data.

— Improved Techniques for Training GANs, 2016.

A major downside of the approach is that the performance of human judges is not fixed and can improve over time. This is especially the case if they are given feedback, such as clues on how to detect generated images.

By learning from such feedback, annotators are better able to point out the flaws in generated images, giving a more pessimistic quality assessment.

— Improved Techniques for Training GANs, 2016.

Another popular approach for subjectively summarizing generator performance is “Nearest Neighbors.” This involves selecting examples of real images from the domain and locating one or more most similar generated images for comparison.

Distance measures, such as Euclidean distance between the image pixel data, is often used for selecting the most similar generated images.

The nearest neighbor approach is useful to give context for evaluating how realistic the generated images happen to be.

Quantitative GAN Generator Evaluation

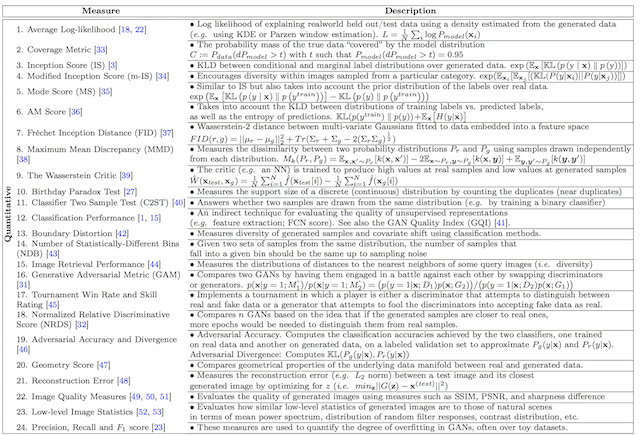

Quantitative GAN generator evaluation refers to the calculation of specific numerical scores used to summarize the quality of generated images.

Twenty-four quantitative techniques for evaluating GAN generator models are listed below.

- Average Log-likelihood

- Coverage Metric

- Inception Score (IS)

- Modified Inception Score (m-IS)

- Mode Score

- AM Score

- Frechet Inception Distance (FID)

- Maximum Mean Discrepancy (MMD)

- The Wasserstein Critic

- Birthday Paradox Test

- Classifier Two-sample Tests (C2ST)

- Classification Performance

- Boundary Distortion

- Number of Statistically-Different Bins (NDB)

- Image Retrieval Performance

- Generative Adversarial Metric (GAM)

- Tournament Win Rate and Skill Rating

- Normalized Relative Discriminative Score (NRDS)

- Adversarial Accuracy and Adversarial Divergence

- Geometry Score

- Reconstruction Error

- Image Quality Measures (SSIM, PSNR and Sharpness Difference)

- Low-level Image Statistics

- Precision, Recall and F1 Score

Summary of Quantitative GAN Generator Evaluation Methods

Taken from: Pros and Cons of GAN Evaluation Measures.

The original 2014 GAN paper by Goodfellow, et al. titled “Generative Adversarial Networks” used the “Average Log-likelihood” method, also referred to as kernel estimation or Parzen density estimation, to summarize the quality of the generated images.

This involves the challenging approach of estimating how well the generator captures the probability distribution of images in the domain and has generally been found not to be effective for evaluating GANs.

Parzen windows estimation of likelihood favors trivial models and is irrelevant to visual fidelity of samples. Further, it fails to approximate the true likelihood in high dimensional spaces or to rank models

— Pros and Cons of GAN Evaluation Measures, 2018.

Two widely adopted metrics for evaluating generated images are the Inception Score and the Frechet Inception Distance.

The inception score was proposed by Tim Salimans, et al. in their 2016 paper titled “Improved Techniques for Training GANs.”

Inception Score (IS) […] is perhaps the most widely adopted score for GAN evaluation.

— Pros and Cons of GAN Evaluation Measures, 2018.

Calculating the inception score involves using a pre-trained deep learning neural network model for image classification to classify the generated images. Specifically, the Inception v3 model described by Christian Szegedy, et al. in their 2015 paper titled “Rethinking the Inception Architecture for Computer Vision.” The reliance on the inception model gives the inception score its name.

A large number of generated images are classified using the model. Specifically, the probability of the image belonging to each class is predicted. The probabilities are then summarized in the score to both capture how much each image looks like a known class and how diverse the set of images are across the known classes.

A higher inception score indicates better-quality generated images.

The Frechet Inception Distance, or FID, score was proposed and used by Martin Heusel, et al. in their 2017 paper titled “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium.” The score was proposed as an improvement over the existing Inception Score.

FID performs well in terms of discriminability, robustness and computational efficiency. […] It has been shown that FID is consistent with human judgments and is more robust to noise than IS.

— Pros and Cons of GAN Evaluation Measures, 2018.

Like the inception score, the FID score uses the inception v3 model. Specifically, the coding layer of the model (the last pooling layer prior to the output classification of images) is used to capture computer vision specific features of an input image. These activations are calculated for a collection of real and generated images.

The activations for each real and generated image are summarized as a multivariate Gaussian and the distance between these two distributions is then calculated using the Frechet distance, also called the Wasserstein-2 distance.

A lower FID score indicates more realistic images that match the statistical properties of real images.

Which GAN Evaluation Scheme to Use

When getting started, it is a good idea to start with the manual inspection of generated images in order to evaluate and select generator models.

- Manual Image Inspection

Developing GAN models is complex enough for beginners. Manual inspection can get you a long way while refining your model implementation and testing model configurations.

Once your confidence in developing GAN models improves, both the Inception Score and the Frechet Inception Distance can be used to quantitatively summarize the quality of generated images. There is no single best and agreed upon measure, although, these two measures come close.

As of yet, there is no consensus regarding the best score. Different scores assess various aspects of the image generation process, and it is unlikely that a single score can cover all aspects. Nevertheless, some measures seem more plausible than others (e.g. FID score).

— Pros and Cons of GAN Evaluation Measures, 2018.

These measures capture the quality and diversity of generated images, both alone (former) and compared to real images (latter) and are widely used.

- Inception Score

- Frechet Inception Distance

Both measures are easy to implement and calculate on batches of generated images. As such, the practice of systematically generating images and saving models during training can and should continue to be used to allow post-hoc model selection.

The nearest neighbor method can be used to qualitatively summarize generated images. Human-based ratings and preference judgments can also be used if needed via a crowdsourcing platform.

- Nearest Neighbors

- Rating and Preference Judgment

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

- Generative Adversarial Networks, 2014.

- Pros and Cons of GAN Evaluation Measures, 2018.

- Improved Techniques for Training GANs, 2016.

- GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium, 2017.

- Are GANs Created Equal? A Large-Scale Study, 2017.

Summary

In this post, you discovered techniques for evaluating generative adversarial network models based on generated synthetic images.

Specifically, you learned:

- There is no objective function used when training GAN generator models, meaning models must be evaluated using the quality of the generated synthetic images.

- Manual inspection of generated images is a good starting point when getting started.

- Quantitative measures, such as the inception score and the Frechet inception distance, can be combined with qualitative assessment to provide a robust assessment of GAN models.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

The post How to Evaluate Generative Adversarial Networks appeared first on Machine Learning Mastery.

Ai

via https://AIupNow.com

Jason Brownlee, Khareem Sudlow