Robotics technology is moving fast. A lot has happened since Microsoft announced an experimental release of Robot Operating System (ROS)[1] for Windows at last year’s ROSCON in Madrid. ROS support became generally available in May 2019, enabling robots to take advantage of the worldwide Windows ecosystem: a rich device platform, world-class developer tools, integrated security, long-term support and a global partner network. In addition, we gave access to advanced Windows features like Windows Machine Learning and Vision Skills and provided connectivity to Microsoft Azure IoT cloud services.

At this year’s ROSCON event in Macau, we are happy to announce that we’ve continued advancing our ROS capabilities with ROS/ROS2 support, a Visual Studio Code extension for ROS and Azure VM ROS template support for testing and simulation. These make it easier and faster for developers to create ROS solutions that keep up with current technology and customer needs. We look forward to adding robots to the 900 million devices running on Windows 10 worldwide.

Visual Studio Code extension for ROS

In July, Microsoft published a preview of the VS Code extension for ROS based on a community-implemented release. Since then we’ve been expanding its functionality—adding support for Windows, debugging and visualization to enable easier development for ROS solutions. The extension supports:

- Automatic environment configuration for ROS development

- Starting, stopping and monitoring of ROS runtime status

- Automatic discovery of build tasks

- One-click ROS package creation

- Shortcuts for rosrun and roslaunch

- Linux ROS development

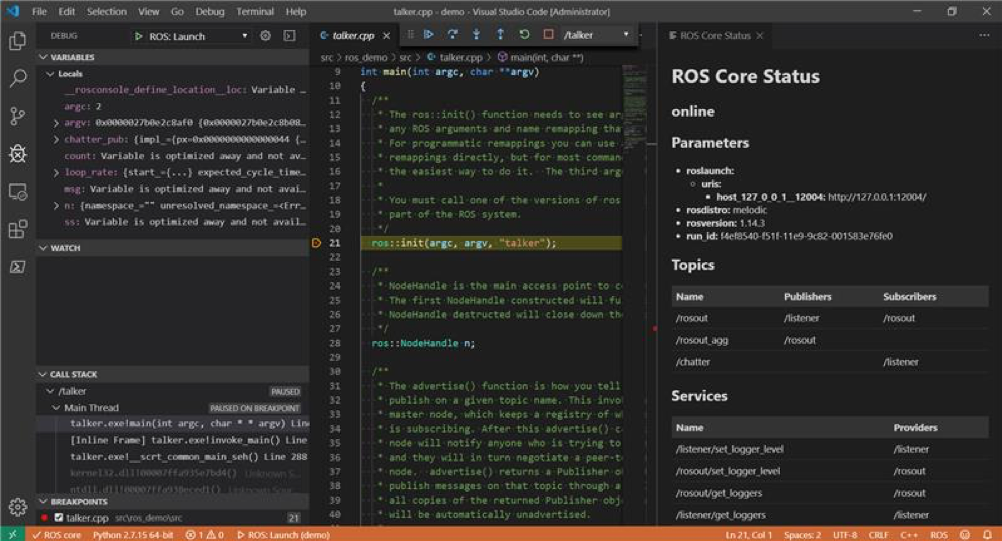

In addition, the extension supports debugging ROS nodes by building on the C++ and Python extensions. Developers can already create a ROS debug configuration in VS Code that attaches to a running ROS node. With the October release, we are pleased to announce that the extension also supports debugging ROS nodes launched by roslaunch at ROS startup.

Visual Studio Code extension for ROS showing ROS core status and debugging experience for roslaunch.

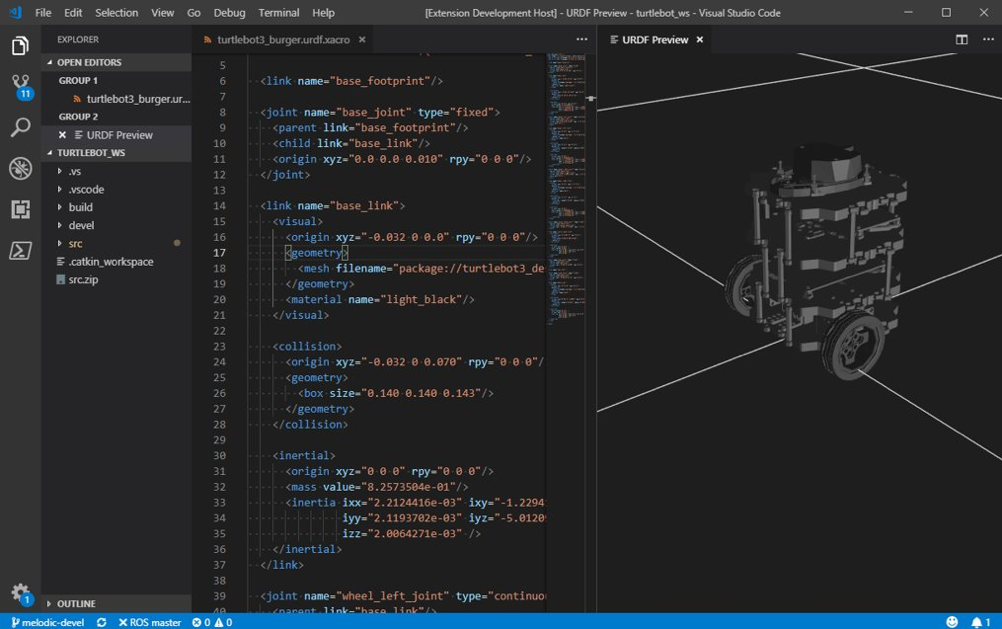

Unified Robot Description Format (URDF) is an XML format for representing a robot model, and Xacro is an XML macro language to simplify URDF files. The extension integrates support to preview a URDF/Xacro file leveraging the Robot Web Tools, which helps ROS developers easily make edits and instantly visualize the changes in VS Code.

Visual Studio Code extension for ROS showing a preview of URDF.
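To make the URDF structure concrete, here is a minimal sketch using only Python's standard library. It is not how the extension renders previews; it just parses a small, hypothetical two-joint robot (the robot, link and joint names are made up for illustration) and recovers the kinematic tree, where each joint connects a parent link to a child link and the root is the one link that is never a child. A Xacro file would first be expanded into plain URDF like this.

```python
import xml.etree.ElementTree as ET

# Hypothetical two-joint arm described in URDF (names are illustrative only).
URDF = """<robot name="demo_arm">
  <link name="base_link"/>
  <link name="upper_arm"/>
  <link name="forearm"/>
  <joint name="shoulder" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <origin xyz="0 0 0.1" rpy="0 0 0"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1.0"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper_arm"/>
    <child link="forearm"/>
    <origin xyz="0 0 0.3" rpy="0 0 0"/>
    <axis xyz="0 1 0"/>
    <limit lower="0" upper="2.0" effort="5" velocity="1.0"/>
  </joint>
</robot>"""


def kinematic_tree(urdf_text):
    """Return (robot name, root link, {joint name: (parent, child, joint type)})."""
    root = ET.fromstring(urdf_text)
    links = {link.get("name") for link in root.findall("link")}
    joints = {}
    for joint in root.findall("joint"):
        parent = joint.find("parent").get("link")
        child = joint.find("child").get("link")
        joints[joint.get("name")] = (parent, child, joint.get("type"))
    # The root link is the only link that never appears as a joint's child.
    children = {child for _, child, _ in joints.values()}
    roots = links - children
    if len(roots) != 1:
        raise ValueError(f"expected exactly one root link, found {sorted(roots)}")
    return root.get("name"), roots.pop(), joints


if __name__ == "__main__":
    name, base, joints = kinematic_tree(URDF)
    print(name, base)
    for jname, (parent, child, jtype) in joints.items():
        print(f"{jname}: {parent} -> {child} ({jtype})")
```

Editing an `<origin>` or `<limit>` value in a file like this is the kind of change the extension's preview lets you see immediately.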

For developers building ROS2 applications, the extension introduces ROS2 support, including workspace discovery, a runtime status monitor and build tool integration. We’d like to provide a consistent developer experience for both ROS and ROS2 and will continue to expand support based on community feedback.

ROS on Windows VM template in Azure

With the move to the cloud, many developers have adopted agile development methods. They iterate quickly and repeatedly deploy their solutions to the cloud, often for testing and simulation once development is complete. An Azure Resource Manager template is a JavaScript Object Notation (JSON) file that defines the infrastructure and configuration for a project. To facilitate this cloud-based testing and deployment flow, we have published a ROS on Windows VM template that creates a Windows VM and installs the latest ROS on Windows build into it using the CustomScript extension. You can try it out here.
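The shape of such a template can be sketched in Python, which builds the JSON document as a dictionary. This is a hypothetical, heavily trimmed example, not the published ROS on Windows template: it assumes an existing VM (the `vmName` parameter), a made-up script name `install-ros.ps1` downloaded from a `scriptUri` parameter, and shows only the CustomScript extension resource that would run that script.

```python
import json


def ros_vm_extension_template(script_file="install-ros.ps1"):
    """Build a minimal ARM template that runs a (hypothetical) ROS install script
    on an existing Windows VM through the CustomScript extension."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "vmName": {"type": "string"},    # name of an existing Windows VM (assumed)
            "scriptUri": {"type": "string"}, # URL of the hypothetical install script
        },
        "resources": [
            {
                # The extension is a child resource of the VM, hence "vmName/extensionName".
                "type": "Microsoft.Compute/virtualMachines/extensions",
                "apiVersion": "2019-03-01",
                "name": "[concat(parameters('vmName'), '/installRos')]",
                "location": "[resourceGroup().location]",
                "properties": {
                    "publisher": "Microsoft.Compute",
                    "type": "CustomScriptExtension",
                    "typeHandlerVersion": "1.10",
                    "autoUpgradeMinorVersion": True,
                    "settings": {
                        # Files listed here are downloaded to the VM before the command runs.
                        "fileUris": ["[parameters('scriptUri')]"],
                        "commandToExecute": f"powershell -ExecutionPolicy Unrestricted -File {script_file}",
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    # Emit the template as JSON, ready to save and deploy with the Azure CLI or portal.
    print(json.dumps(ros_vm_extension_template(), indent=2))
```

Keeping the template declarative means each iteration redeploys the same, reproducible environment instead of hand-configuring a VM.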

Expanding ROS and ROS2 support

Microsoft is expanding support for ROS and ROS2, including creating Microsoft-supported ROS nodes and building and providing Chocolatey packages for the next releases of ROS (Noetic Ninjemys) and ROS2 (Eloquent Elusor).

Azure Kinect ROS Driver

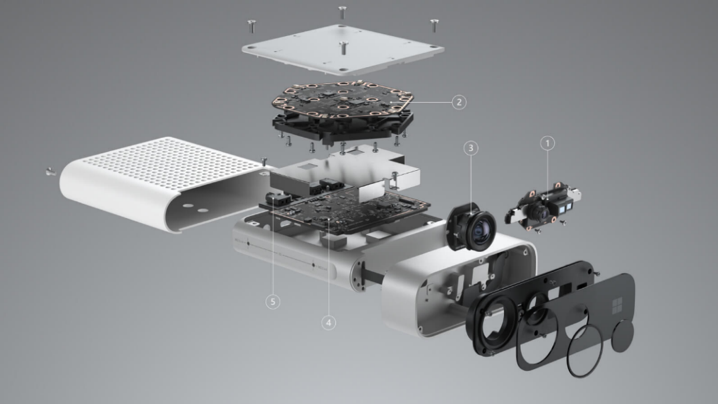

Internal visualization of the Azure Kinect.

The Azure Kinect Developer Kit is the latest Kinect sensor from Microsoft. The Azure Kinect contains the same depth sensor used in HoloLens 2, as well as a 4K camera, a hardware-synchronized accelerometer and gyroscope (IMU), and a 7-element microphone array. Along with the hardware release, Microsoft made available a ROS node for driving the Azure Kinect and will soon support ROS2.

The Azure Kinect ROS node emits a PointCloud2 stream containing both depth and color information, along with depth images, raw image data from both the IR and RGB cameras, and high-rate IMU data.

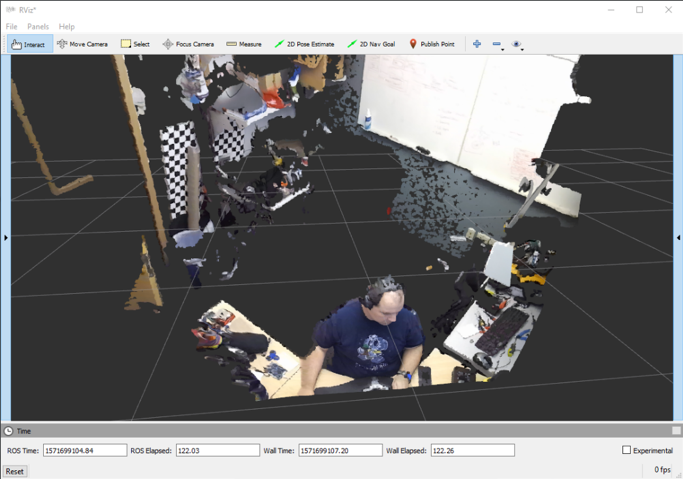

Colorized point cloud output of the Azure Kinect in RViz.
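A PointCloud2 message carries its points as one flat byte buffer (`data`), described by a list of named fields with byte offsets and a fixed `point_step` per point. The sketch below packs and unpacks such a buffer with Python's `struct` module. The field layout (x, y, z, then a packed color at offset 12) is an assumption for illustration and is not necessarily the Azure Kinect driver's exact layout; the color packing follows the common PCL-style 0x00RRGGBB convention.

```python
import math
import struct

# sensor_msgs/PointField datatype constant: FLOAT32 == 7.
FLOAT32 = 7

# Hypothetical layout: (name, byte offset, datatype) triples that would populate msg.fields.
FIELDS = [("x", 0, FLOAT32), ("y", 4, FLOAT32), ("z", 8, FLOAT32), ("rgb", 12, FLOAT32)]
POINT_STEP = 16          # bytes per point
POINT_FORMAT = "<fffI"   # little-endian: three float32 coordinates + 32 color bits
assert struct.calcsize(POINT_FORMAT) == POINT_STEP


def pack_rgb(r, g, b):
    # PCL-style convention: 0x00RRGGBB stored in the 4 bytes of the "rgb" field
    # (declared FLOAT32, but the bits are really an integer color).
    return (r << 16) | (g << 8) | b


def pack_cloud(points):
    """points: iterable of (x, y, z, r, g, b). Returns the flat `data` buffer."""
    buf = bytearray()
    for x, y, z, r, g, b in points:
        buf += struct.pack(POINT_FORMAT, x, y, z, pack_rgb(r, g, b))
    return bytes(buf)


def unpack_cloud(data):
    """Inverse of pack_cloud; yields (x, y, z, r, g, b) and skips points whose
    depth is NaN, which non-dense clouds use to mark invalid measurements."""
    for offset in range(0, len(data), POINT_STEP):
        x, y, z, rgb = struct.unpack_from(POINT_FORMAT, data, offset)
        if math.isnan(z):
            continue
        yield x, y, z, (rgb >> 16) & 0xFF, (rgb >> 8) & 0xFF, rgb & 0xFF
```

For an unorganized cloud, `height` is 1, `width` is the number of points and `row_step` is `point_step * width`; a consumer like RViz reads the `fields` list to find where x, y, z and color live in each point.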

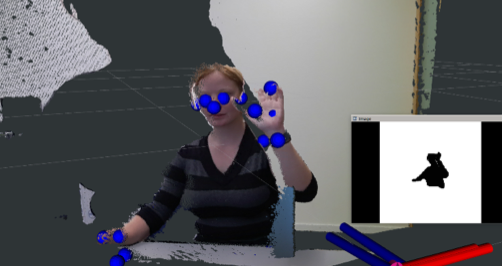

A community contribution has also enabled body tracking. It links to the Azure Kinect Body Tracking SDK and outputs an image mask for each tracked individual, along with the poses of the tracked body joints as markers.

A visualization of skeletal tracking in RViz.

You can order an Azure Kinect DK at the Microsoft Store, then get started using the Azure Kinect ROS node here.

Windows ML Tracking ROS Node

The Windows Machine Learning API enables developers to use pre-trained machine learning models in their apps on Windows 10 devices. This offers developers several benefits:

- Low latency, real-time results: Windows can perform AI evaluation tasks using the local processing capabilities of the PC, with hardware acceleration on any DirectX 12 GPU. This enables real-time analysis of large local data, such as images and video. Results can be delivered quickly and efficiently for use in performance-intensive workloads like game engines, or in background tasks such as indexing for search.

- Reduced operational costs: Together with the Microsoft Cloud AI platform, developers can build affordable, end-to-end AI solutions that combine training models in Azure with deployment to Windows devices for evaluation. Significant savings can be realized by reducing or eliminating costs associated with bandwidth due to ingestion of large data sets, such as camera footage or sensor telemetry. Complex workloads can be processed in real-time on the edge with minimal sample data sent to the cloud for improved training on observations.

- Flexibility: Developers can choose to perform AI tasks on device or in the cloud based on what their customers and scenarios need. AI processing can happen on the device if it becomes disconnected, or in scenarios where data cannot be sent to the cloud due to cost, size, policy or customer preference.

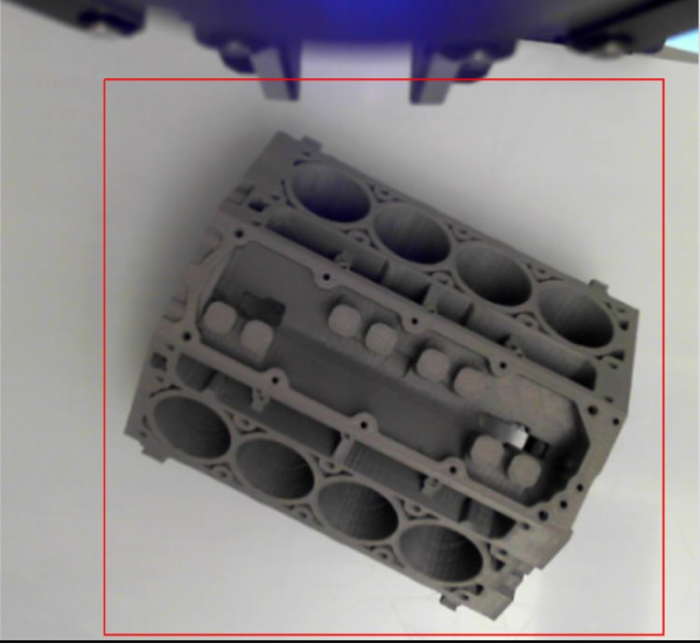

The Windows Machine Learning ROS node hardware-accelerates inferencing of your machine learning models and publishes a visualization marker relative to the frame of the image publisher. The output of Windows ML can be used for obstacle avoidance, docking or manipulation.

Visualizing the output of a model with Windows ML. Model used with permission: www.thingiverse.com/thing:1911808.
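The post does not describe how the node positions its marker. One common approach, assumed here purely for illustration, is to back-project the center of a detected bounding box through a pinhole camera model into a ray in the camera's optical frame (ROS convention: x right, y down, z forward), then place the marker some distance along that ray. The intrinsics `fx`, `fy`, `cx`, `cy` and the `distance` argument are assumptions; a single image gives no depth, so that distance would have to come from elsewhere (for example a depth sensor).

```python
import math


def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) through a pinhole model into a unit ray in the
    camera optical frame (x right, y down, z forward)."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def box_center_marker(box, intrinsics, distance):
    """Hypothetical helper: position of a marker `distance` metres along the ray
    through the center of box = (u_min, v_min, u_max, v_max), expressed in the
    image publisher's frame. intrinsics = (fx, fy, cx, cy)."""
    u = (box[0] + box[2]) / 2.0
    v = (box[1] + box[3]) / 2.0
    rx, ry, rz = pixel_to_ray(u, v, *intrinsics)
    return (rx * distance, ry * distance, rz * distance)
```

Because the result is expressed in the camera's own frame, a TF lookup can then move it into a robot or map frame for obstacle avoidance, docking or manipulation.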

Azure IoT Hub ROS Node

Enable highly secure and reliable communication between your IoT application and the devices it manages. Azure IoT Hub provides a cloud-hosted solution backend to connect virtually any device. Extend your solution from the cloud to the edge with per-device authentication, built-in device management and scaled provisioning.

The Azure IoT Hub ROS Node allows you to stream ROS messages through Azure IoT Hub. These messages can be processed with an Azure Function, streamed to Blob storage or processed through Azure Stream Analytics for anomaly detection. Additionally, the Azure IoT Hub ROS Node allows you to change properties in the ROS Parameter Server using Dynamic Reconfigure, driven by properties set on the Azure IoT Hub device twin.
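The post does not show how device twin properties are mapped onto parameters. A minimal sketch, assuming the node receives the twin's desired properties as a Python dict, might filter and type-check them before handing them to Dynamic Reconfigure. The parameter names below are hypothetical; the `$version` key is the metadata IoT Hub includes with desired properties.

```python
# Hypothetical parameters of a ROS node, with the types Dynamic Reconfigure would enforce.
CURRENT_PARAMS = {"max_speed": 0.5, "publish_rate": 10, "enable_logging": False}


def apply_desired_properties(params, desired):
    """Return (updated copy of params, list of rejected keys).

    Metadata keys such as "$version" are skipped, keys that are not known
    parameters are ignored, and values whose type does not match the current
    parameter type are rejected rather than applied."""
    updated = dict(params)
    rejected = []
    for key, value in desired.items():
        if key.startswith("$") or key not in params:
            continue
        expected = type(params[key])
        # JSON numbers may arrive as ints where a float parameter is expected.
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)
        if type(value) is not expected:
            rejected.append(key)
            continue
        updated[key] = value
    return updated, rejected


if __name__ == "__main__":
    desired = {"max_speed": 1, "publish_rate": "fast", "enable_logging": True, "$version": 7}
    print(apply_desired_properties(CURRENT_PARAMS, desired))
```

Validating on the robot side keeps a mistyped cloud-side edit from pushing an invalid value into a running node; rejected keys could be reported back through the twin's reported properties.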

Come learn more and see some of these technologies in action at ROSCON 2019 in Macau. We’re hosting a booth throughout the event (October 31 – November 1), as well as a talk on Friday afternoon. You can get started with ROS on Windows here.

[1] ROS is a trademark of Open Robotics

The post Windows expands support for robots appeared first on Windows Blog.

Microsoft

via https://www.aiupnow.com

Lily Hou, Khareem Sudlow